Key Recommendations

Many factors determine success in large-scale projects. What is surprising is that management and motivation, combined with measurement and tracking of the right things, explain success far more than the selection of tools or the specific methods of work employed within the systems development function. All too often we find well-organized teams, using the most advanced tools and methodologies, that nevertheless are not successful. As a result, we have focused on the management and leadership skills that are required. In addition, we have identified a serious problem with the governance of large-scale projects and have formulated recommendations that address it as well. Putting all of these factors together will significantly raise your chances of success, or help you turn around a project that is teetering on the brink of disaster.

Make sure the business vision has been clearly articulated and championed by senior executive management. The fundamental building block for a successful large-scale systems effort is senior executive management support. The champion for the project must come from this group. Without this important element, the likelihood of failure is very great. Systems of this magnitude need to be defined in terms of key business outcomes. It is by focusing on the business outcomes that the meaning of the large investment required for such systems becomes clear. Outcomes need to be defined not in terms of process or any other inward-looking criteria, but rather in terms of specific business results delivered in the market or with customers. If the organization is unable to define these outcomes for the system, then consideration should be given to not building it.

Place the program under a leader with obvious business “subject matter” credentials, and credibility within the enterprise. Leadership of large-scale systems efforts is critical to success. What kind of leadership? A common mistake is to treat the effort as though it were a systems project. It is not. It is a business project, and as a result should be led by a well-recognized business leader. Picking the leader is critical, and should not be left to the IT group. Instead, it is an enterprise matter, and senior executive management needs to be part of the consultations. Picking a senior business executive to lead these projects is a strong signal for success. It makes it easier to communicate systems initiatives to others in senior management and to the various business functions whose cooperation is needed.

Establish an effective “governance” linkage to executive management. Failure comes from the lack of a clear governance model that links the IT group in charge of the project with the executive management that ultimately is responsible for signing the checks for funding. The purpose of the governance model is to build into the process a system of collaboration between IT and executive management that ensures continued funding. This continued support comes in the form of consultations that keep clarifying the strategic mission of the project and review ongoing changes in its strategic dimensions. Sometimes, for example, external factors change, leaving the IT shop without any clear way to adjust its efforts. Senior business management is generally charged with being outward-looking and is responsible for monitoring external competitive threats and other market developments, including emerging opportunities, in order to provide constant steering of the systems development process for large-scale systems.

Assure the presence of in-depth business function membership on the project team. Another failure factor is a project that does not have business function membership on the operational side of the project. Successful projects keep this part of the governance structure in place, and ensure that the business function representatives are constantly engaged as the system is created and rolled out into the organization. The most common problem is the “fair weather friend” syndrome, in which the business function leaders are there at the beginning of the project, but then disappear or divorce themselves from the effort the moment a problem appears or there is the slightest hint of difficulty. “It’s not my project – I would have done it differently,” says the back-biting business function leader. “They want to do it that way, then let them pay for it.”

Maximize continuity among the project team participants. Even short-term turnover is a major problem for most IT shops. For long-term projects, the problems magnify. Often only a few original members of a project are around to see it through to its conclusion. A key best practice in this regard is to work hard and carefully at retaining the senior talent in any large-scale job. This is not to say that the rest of the available talent should not also be preserved and sheltered, but continuity among team members is a major success factor in keeping projects on time and on budget. The nature of large-scale systems is such that the cumulative effects of a series of small delays from various sub-teams can add up to large delays overall, particularly when the critical path is changed – as it almost always is. For smaller projects, this issue can be dealt with on an ad hoc basis; for large-scale projects, however, the ripple effect can become debilitating. Therefore, a formalized system for maintaining continuity of participants is a critical element.

Implement stage gate reviews with executive management. Stage gate reviews are points in the project where the organization makes a major assessment of the project's continued viability. They have their parallel in the milestone process of classical project management. Stage gates are defined so that a clear message can be formulated for executive management in the company. The funding and mission of the effort rest upon their continued support, and they need to be kept constantly informed regarding the status of the project. In this way, funding is assured, and the higher elements of strategy are continually injected into the systems delivery process. This is in contrast to the practice of letting a large-scale systems effort gradually sink into oblivion, away from the eyes of executive management. The stage gate review process needs to be scheduled with executive management far in advance, and kept on their calendar. A sense of importance and mission must be maintained, so that actual executive management, not lower-level representatives, participates in these meetings.

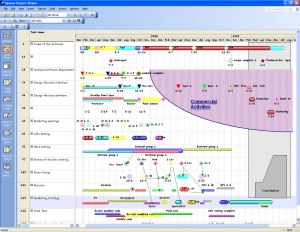

Track and publish schedule results and costs. Good program management rests upon carefully watching expenditures for each stage in the process. A best practice is to publish the results regarding time and budget to all persons involved in the project, on both the IT side and the business side. What is the effect? This helps all parties involved to realize the critical nature of the effort that is underway. Doing this usually helps to motivate the various teams on the critical path and to increase the general level of awareness of the project, thus stimulating overall efforts at cooperation, something sorely missing from many projects. In most organizations, it is human psychology to band together even more tightly into cooperative teams when there is a sense of urgency and a pressing business-critical mission that needs to be accomplished. In contrast, it is the silent projects that die a silent death, unnoticed by the larger organization.
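Publishing time and budget results per stage can be as simple as a short, regularly circulated variance report. The following is a minimal sketch in Python, not a prescribed method: the `StageResult` fields, the `variance_report` function, and the figures are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class StageResult:
    """Planned versus actual figures for one project stage (illustrative fields)."""
    name: str
    planned_days: int
    actual_days: int
    planned_cost: float
    actual_cost: float


def variance_report(stages):
    """Return one printable line per stage showing schedule and cost variance."""
    lines = []
    for s in stages:
        day_slip = s.actual_days - s.planned_days
        cost_overrun = s.actual_cost - s.planned_cost
        # Guard against a zero planned cost to avoid division by zero.
        cost_pct = 100.0 * cost_overrun / s.planned_cost if s.planned_cost else 0.0
        lines.append(
            f"{s.name}: schedule {day_slip:+d} days, "
            f"cost {cost_overrun:+,.0f} ({cost_pct:+.1f}%)"
        )
    return lines


# Made-up example figures, not data from any real project.
stages = [
    StageResult("Requirements", 60, 75, 400_000, 450_000),
    StageResult("Design", 90, 85, 600_000, 580_000),
]
for line in variance_report(stages):
    print(line)
```

A positive number means the stage ran late or over budget; a negative number means it came in early or under budget. The same lines can be sent to both the IT and the business participants so that everyone sees identical figures.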

Assure the effectiveness of mechanisms to coordinate with other projects. Large-scale systems projects are never an island unto themselves, but rather exist within the rich context of many other initiatives that are going on, some of which occasionally may have higher short-term priorities. It is necessary to ensure that any large-scale systems effort is integrated into the planning for other, smaller projects that may be underway. The purpose of this is twofold. It is useful in ensuring allocation of resources, both funding and skills, among the various projects. It is also necessary in order to ensure a consistent architecture. This coordination process needs to be constant, and to be built into the overall governance mechanism of the project.

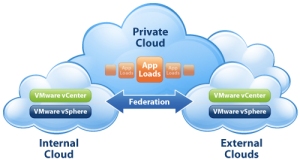

Assure effective coordination in planning the Technical Architecture. Due to the enterprise-wide nature of large-scale systems, it is often the case that different systems (and sub-systems) overlap or even conflict with one another. This is why the role of the chief architect is so important. Coordination planning around architecture is one of the key high-level tasks of management for a large-scale systems implementation. This process is not a one-time step in a long sequence of systems development events. Rather, it is a constant point of review that is done as a punch list element for each major step along the way. In this way, many problematic issues are resolved before they occur.

Assure that the project team has adequate technical staffing and liaison. Throughout a lengthy project, the skills required will change. This factor, combined with the inevitable turnover in IT organizations and the long-term nature of a large-scale project, introduces a structural uncertainty into any proposed project. We have seen that between different sub-projects, there is always the challenge of distributing adequate technical and specialized talent effectively. This in itself is a serious management challenge. At the same time, most projects are forced to rely upon outside contractors for major pieces of the work. Finally, all of the teams involved, whether insiders or outsiders, must be set into a program management structure that will facilitate adequate communications with the business. The best practice is not merely to set this type of communication as a policy, but to systematically build it into the schedule of work so that there is little doubt it will take place. An additional benefit is that this type of scheduling ensures that this exchange of important information eventually becomes an accepted part of corporate life. This, in turn, helps to inculcate the same values into the different sub-contractors who might be working on various projects from time to time.

Assure that effective escalation processes are in place. Since large-scale development programs are composed of different projects, and these projects in turn are sometimes composed of sub-projects, inevitably there arise conflicts between different teams. Disagreements over different technical solutions, cross-team effects of one solution spilling over onto another, and the struggle for resources that must carefully be allocated across and between different teams – these are only a few of the issues that spawn disagreements. From the very beginning it is best practice to design into the organizational structure of the project management group the specific ways for escalation to take place. This will ensure that a coherent decision-making structure is put into place.

Make change leadership a planned and visible component. All too many IT shops take on large-scale systems development projects and gradually allow them to slip out of visibility to the business side of the house. The danger in this behavior is that IT eventually comes to be seen as the “owner” of the project. In reality, of course, it is the entire business (not IT) that is the owner of the project. In order to ensure this idea remains at the forefront of people’s minds, leadership of change should be engineered into the program management of the project. Visibility is important in order to continually refresh corporate learning and consciousness of the changes that are taking place. In addition, it helps the IT team remain in contact with the business so that important information can be picked up regarding anticipated changes in strategy.

Actively envision and plan for the “Living There” stage – supporting the new environment and harvesting the business benefits. Effectively conducting a large-scale development effort is more than the day-to-day operations involved with program management and reporting. The best projects have in their field of vision a picture of how the system will operate when it is completed – not only a technical vision, but an operational vision encompassing the business processes that will of necessity change. In order to harvest business benefits, companies should prepare for the completed system and how it will be used a long time in advance, instead of waiting until the system is near completion and only then worrying about how it will be used and built into business processes. When companies wait too long before focusing on the end state, we have found their chances of success drastically reduced. Envisioning the future, and actually training for the future, needs to be built into the systems development process from the very beginning, or at a minimum from the middle of a project. This will help ensure that everyone is on board and that the organization will be prepared to take immediate and full advantage of the system once it finally is ready (see Project ES: Implementing Enterprise Systems for details regarding the different stages of ERP rollouts).

Assure ongoing communication and dialogue with the target business operation – manage expectations. The politics of large-scale systems implementation require constant communication and expectations management. This must be systematically maintained throughout the project, so that towards the end – or at any other crucial moment – it is possible to get the support and funding needed to accomplish milestones in the project. Management of expectations is a large part of this effort. The pace, timing, and verification of benefits to end-user groups need to be controlled carefully so that the system does not develop a poor reputation before it is even completed.

Article by Shaun White http://www.sacherpartners.eu